The Grey Areas of AI Decision Making

- Melodena Stephens

- Feb 3, 2023

AI decision making is complex. It is a mixture of human and machine decisions. A machine does not train itself, nor does it set its own decision boundary - humans do. Humans create the data, feed it to the machine, and often choose (willingly or unknowingly) to accept the output or the decision the machine churns out. They don't question the data or the process (sometimes because they don't understand it).

Think of these situations - you are driving and your car GPS plots an optimized route. You see the traffic ahead but decide the machine is not wrong.

You key in a search term and get the top ten results. You assume the top result must be the best, and you are certainly not going to look at page 20 of the results.

You walk on a street and the zebra crossing light shows green for you - you continue to walk (even though there is a malfunction).

You go to the grocery store and the bill is $17.19. You swipe your credit card, assuming the tally is correct.

Of course, these are simple examples. But every day we default decision making to an AI. So AI ethics is about human choice - should people have the right to choose, and to be aware of which decisions are being made for them?

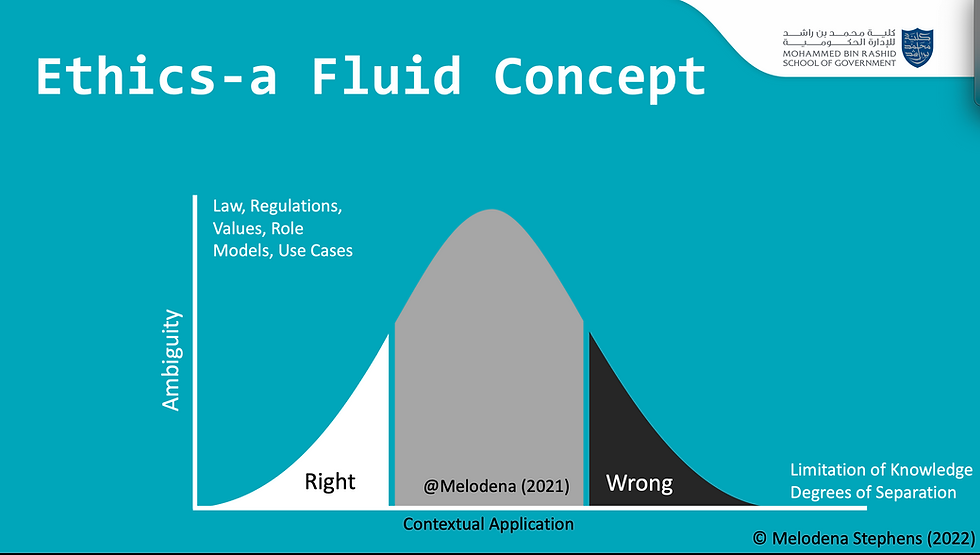

Real life may be far more complicated than the examples I gave. Most AI decisions are in grey areas where right and wrong are not clear.

You wish to create a program to optimise limited resources in a hospital during COVID, for example: Who gets the oxygen - what are the parameters you key in? Should everyone get a little oxygen to be fair and transparent (and most likely everyone dies), or do you prioritise people based on normal life expectancy - so, on their age? Maybe chances of survival? Money? Gender? Pregnancy, since it is really two lives? [this is just a simple example to illustrate a point]
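To make the point concrete, here is a minimal Python sketch of how the "parameter you key in" decides the outcome. Everything in it is an assumption made for illustration: the `Patient` fields, the `allocate` helper, the two priority rules and the four patients are invented toy values, not a real triage method or real data.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Patient:
    name: str
    age: int
    survival_chance: float  # hypothetical estimate between 0 and 1


def allocate(patients: List[Patient], units: int,
             priority: Callable[[Patient], float]) -> List[str]:
    """Give one oxygen unit each to the `units` highest-priority patients."""
    ranked = sorted(patients, key=priority, reverse=True)
    return [p.name for p in ranked[:units]]


def youngest_first(p: Patient) -> float:
    # Rule 1 (a human choice): younger patients rank higher.
    return -p.age


def best_odds_first(p: Patient) -> float:
    # Rule 2 (a different human choice): higher survival chance ranks higher.
    return p.survival_chance


# Invented toy patients, for illustration only.
patients = [
    Patient("A", 82, 0.30),
    Patient("B", 45, 0.60),
    Patient("C", 30, 0.90),
    Patient("D", 67, 0.75),
]

by_age = allocate(patients, 2, youngest_first)
by_odds = allocate(patients, 2, best_odds_first)

print("Youngest first:", by_age)
print("Best odds first:", by_odds)
```

The same patients and the same two oxygen units produce two different lists, and the only thing that changed is the rule a person chose to key in. The machine just sorts.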

Who makes these decisions?

Someone has to. Too often an old, outdated research paper is pulled up (as in the Appriss case) and used to program the AI. Or some smart young person decides new knowledge is better than experience, as was the case with the UK AI exam grading during COVID. Or even worse, a bunch of senior managers decide that the only way forward is AI (without a clue what it means, or what the decision implies for the business model, the people and future value). It is estimated that 60-80% of AI projects fail. AI systems are greedy and need to be constantly fed new data (hopefully representative of the people for whom the decisions are being made), and they need constant upgrading and protection (more on this later).

Not to be pessimistic, but we need to balance the huge progress and possibilities of AI systems (think ChatGPT) with societal skills (or lack of skills) and concerns. AI is there in banking, government services, mobility solutions, health care, entertainment... and every day we produce more and more digital data that can create more AI business use-cases. Are you letting the human know you are using their digital data to create a new system? ChatGPT reached 100 million users within two months of its launch - think of the data those 100 million users created.

Does this mean it comes down to the ethics of AI system development, deployment, and adoption? A lot is being said about how AI will augment human intelligence and create more jobs. Too often it is being used to replace human jobs and dampen human intelligence. If the cashier system does not work tomorrow, would the teller still be able to register your purchases, manage stock and calculate your total? Will a student trained entirely with an AI be able to complete a surgery from memory if there is an electrical failure and all backup systems are down?

AI ethics shows in how transparent your decision making is about the sustainability of the supply chain (not just carbon footprint and data storage/centres, but also AI sourcing, e-waste and jobs lost). Ethics shows in your decisions - do you choose the human or the machine (why are you laying off staff?). Do you choose communities and respect their way of life, or are you imposing your way of life on communities across the world? Do you protect the planet, or do you protect your shareholder wealth (and your bonus and stock options)?

Yes, AI is a super cool tool that can change humanity - but let's not throw out everything of the old way of life without understanding why. Pause, think, and reflect. Shades of grey can go both ways - good and bad.
